COMS 6998: Advanced Topics in Parallel and Cloud Systems: Scalable Matrix Computation

Location/Time: F 12:10pm - 2:00pm, 829 Seeley W. Mudd Building

Instructor: Vasileios Kalantzis

Contact: vk2599(at)columbia.edu

TA: Rushin Bhatt [rsb2213(at)columbia.edu]

Course Description

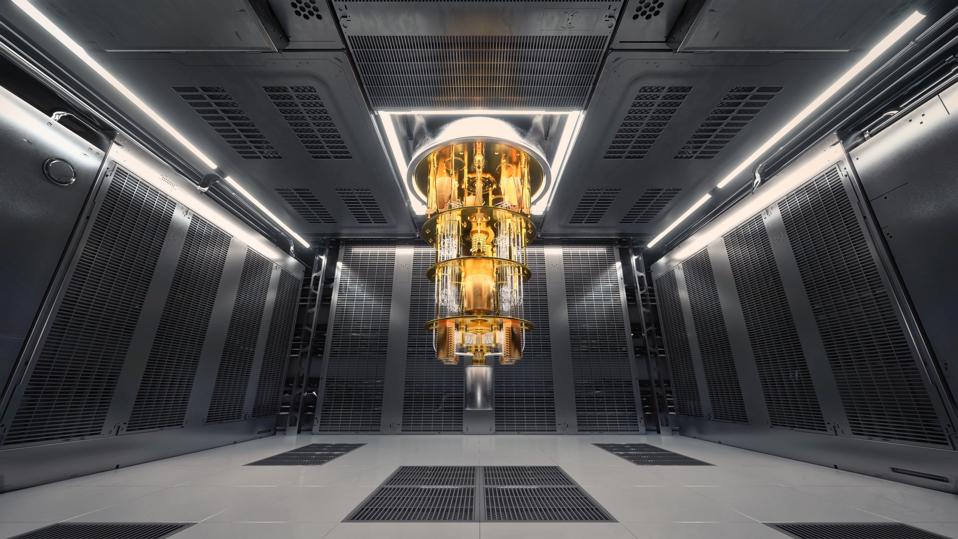

This course investigates advanced methods for large-scale matrix computations and their applications on modern and emerging computing hardware (e.g., post-von Neumann computers). Topics include randomized and asynchronous algorithms, straggler- and fault-tolerant methods, high-performance GPU/MPI implementations, and in-memory computing paradigms such as analog and quantum computing.

Prerequisites

The minimum requirements for the course are basic concepts of linear algebra and programming. Knowledge of and experience with matrix computations and machine learning algorithms will be helpful. We will rely most heavily on linear algebra kernels/algorithms, but we will also learn concepts related to high-performance computing and post-von Neumann computer architectures. The course will involve rigorous theoretical analyses and some programming (practical implementation and applications).

Grading Policy

Grading is based on problem sets, project/presentation, and class participation. There will be no exams. The breakdown is as follows:

- Homework & coding assignments - 50%: Four assignments including problem sets and programming exercises.

- Paper reviews & class participation - 15%

- Final project (research report + presentation) - 35%: There will be a project selection (before Spring break), and a final presentation of the projects during the last week of the semester, along with a final report submission.

Assignments are to be submitted through Canvas and should be individual work, although you are allowed to discuss the problems with your classmates and to work collaboratively. The preferred format is a single PDF, preferably typewritten (using LaTeX, Markdown, or some other mathematical formatting program). In general, late assignments will not receive credit.

Weekly Schedule

| Week | Title | Topics |
|---|---|---|
| 1 | Introduction & Motivation | High-Performance Computing, von Neumann computer model, accelerators and in-memory computing, performance metrics and the memory bottleneck |
| 2-3 | Randomized Matrix Algorithms | Randomized SVD and PCA, randomized butterfly transformation, probabilistic matrix inversion, random variables and Monte Carlo estimation |
| 4 | Asynchronous Iterative Algorithms | Federated learning and decentralized ML (Tsitsiklis & Bertsekas) |
| 5 | PageRank and Collaborative-filtering Recommender Systems | EigenREC model and recommendation performance metrics, random walks, hubs and authorities |
| 6 | Matrix Computations in Large Language Models | Transformers, Graphics Processing Units and Performance Metrics, Energy and Data Barriers, Importance of Linear Algebra in AI |

Useful Links

Content related to the material presented in this class:

- CSCI 5304: Computational Aspects of Matrix Theory

- The Art of HPC

- Supercomputing for Artificial Intelligence

- Georg Hager's Blog: Random thoughts on High Performance Computing

Disclaimer

Any opinions, statements, or conclusions expressed in the above reports do not necessarily reflect the views of the acknowledged funding sponsors or Columbia University.